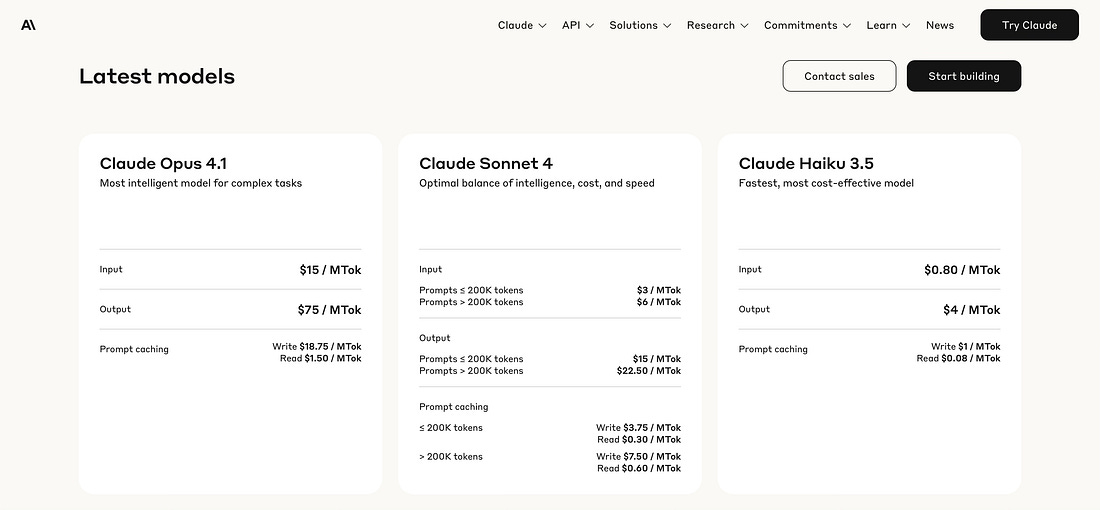

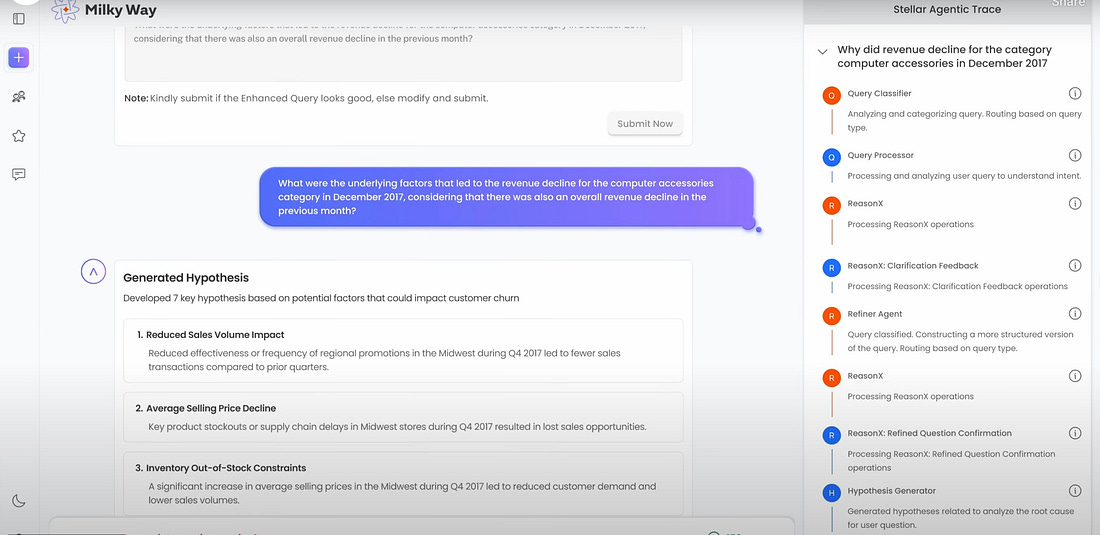

🧠 Neural Dispatch: Anthropic tokens, Perplexity’s Chrome play and using the Ray-Ban Meta AI glasses

The biggest AI developments, decoded. August 20, 2025

Hello!

ALGORITHM

This week, we chat about Anthropic’s big move with Claude Sonnet 4, now able to process a million tokens for a query; AI solutions company Tredence launching what they call an “enterprise-ready constellation of agents”; and trying to figure out Perplexity’s play with a bid for the Google Chrome browser.

Anthropic’s 5X Context Jump

The coding battles just got a new alignment drop. Anthropic has raised the bar in a way that could force AI rivals, including OpenAI, to scramble. The company announced a 5X increase in the context window for Claude Sonnet 4, which is now capable of processing up to 1 million tokens for a query: essentially, the ability to digest the equivalent of thousands of pages of text in a single go.

Why does this matter? Because context length is quickly becoming the new arms race metric in AI, and it is even more relevant for developers. For context, OpenAI’s GPT-4o supports a maximum of 128K tokens, while Google’s Gemini 1.5 Pro goes up to 2M tokens (in certain settings). With this, Anthropic has planted its flag with a practical, scaled-up version. Longer contexts mean more reliable recall for enterprise tasks, since everyone is talking about AI agents and all that. That is perhaps most relevant for legal firms using AI tools to go through reams of paper, developers doing codebase analysis, and any line of work with research-heavy workflows. Anthropic’s claims of maintaining speed and accuracy at this scale, which has historically been the bottleneck, will certainly be tested.

A key takeaway? Context windows (consumers may not realise this enough) are as crucial as raw model smarts or the hardware infrastructure. Claude is already known for safety-tuned responses (more than others, the focus is clear). Now, Anthropic looks like it’s playing on the front foot.

Tredence’s Milky Way

I have some opinions about Agentic AI, and perhaps you do too. For now, enterprises are only too happy to dabble with the idea, and so be it. A few weeks ago, I’d written about how continuous-learning agentic systems form the “Milky Way” of possibilities for business decision-making. That emerged from my interaction with Soumendra Mohanty, who is chief strategy officer at data science company Tredence. They are better placed than most to decode the changes the AI space is bringing to the workplace, more so because they design AI agent workflows for enterprises across multiple verticals, including healthcare, banking and telecom. Now, they’ve launched what they call an “enterprise-ready constellation of agents” that they say are designed to act like digital co-workers. The name? Milky Way.

So, what does this mean in practice? Unlike traditional AI assistants that sit around waiting for prompts, these agents, by the way they are structured, can supposedly reason, collaborate, and execute certain tasks on their own. Tredence says that in pilot deployments, companies in retail, consumer packaged goods, telecommunications, and healthcare reported a 5X improvement in time-to-insight and a 50% reduction in analytics costs.

The bigger shift here is philosophical. As Tredence’s CEO Shub Bhowmick puts it, “the real challenge isn’t building smarter models, it’s building systems that understand context, adapt to complexity, and drive meaningful outcomes”. That’s rare pragmatism in an era where AI bosses tend to drive the hype. The reality is, not every organisation will benefit from using AI agents, and not every role will be better off by firing the human and putting a machine in its place.

What’s Perplexity going for?

Perplexity has made an unsolicited $34.5 billion bid for Google Chrome. Right after, OpenAI also hinted at some interest.
I’ll focus on the original move at this time: why is an AI startup trying to buy the world’s most dominant browser, and quoting a price that actually exceeds Perplexity’s own valuation several times over? If you are wondering where the money will come from, the company insists multiple “large investment funds” will finance that transaction. At first glance, it seems absurd. But dig deeper, and you see Perplexity’s playbook. Chrome stopped being just a browser many years ago, and with its user base of approximately 3 billion, it’s potentially the largest distribution channel that a tech company can have (Apple’s iPhones get close, in terms of numbers and demographic of users). For an AI company like Perplexity, even the dream of owning that scale points to an advantage that none of their rivals would have. It also aligns perfectly with their AI browser aspirations, with Comet.

Here’s what I’ve been thinking (but this certainly hasn’t kept me up at night): what if Google flips the script? Imagine a reverse Uno, with Google offering to buy Perplexity instead, absorbing its fast-growing AI-driven search platform. That could help Google hedge against AI competition, and Perplexity’s own rise. Right now, Perplexity’s bid is unlikely to succeed, but it’s a shot across the bow: a way for Perplexity to signal ambition and spark conversation, though it is absolutely unlikely to bait Google into making a move.

PROMPT

This week, we’ll explore how to use Ray-Ban Meta smart glasses for real-time object identification and language translation, features that turn a wearable into a live AI assistant for the world around you. Ray-Ban Meta can be called smart glasses or AI glasses, whichever rolls off your tongue easier. These sunglasses, perhaps the ideal AI wearable, as many of us are discovering, combine Meta’s AI assistant with an embedded camera and microphone. This allows you to ask questions about what you’re seeing, and more.
To get started, tap and hold the side of the glasses or say “Hey Meta” to activate the assistant. You can then ask the glasses to describe objects, people, or scenes in front of you. Three standout use cases are emerging: object recognition, on-the-fly translation, and travel assistance. For object recognition, simply look at an item and ask, “What is this?” The glasses will identify it, whether it’s a type of plant, a landmark, a nice car or a household item. For translations, look at a menu, street sign, or product packaging and ask, “Translate this into English (or another language).” The system uses AI vision and large language models to provide spoken translations through the glasses’ speakers. Travel assistance is where these two features overlap. Imagine pointing at a metro map in Paris or a restaurant board in Tokyo: the glasses can identify the location and translate the text in real time, removing friction in navigating foreign environments.

To get this to work, put on the Ray-Ban Meta glasses and ensure they’re connected to the Meta AI app on your phone > activate the assistant by voice or touch > ask contextual questions like “What is this landmark?” or “Translate this sign into Hindi.” > listen to the response. In some cases, you’ll find more information in the Meta AI app.

Keep in mind: translation and object recognition features may still have some limitations depending on the language and region settings, and for this to work as it should, your phone should have an active 5G/4G or Wi-Fi connection. Only then can queries be framed conversationally, and not as isolated queries or commands.

THINKING

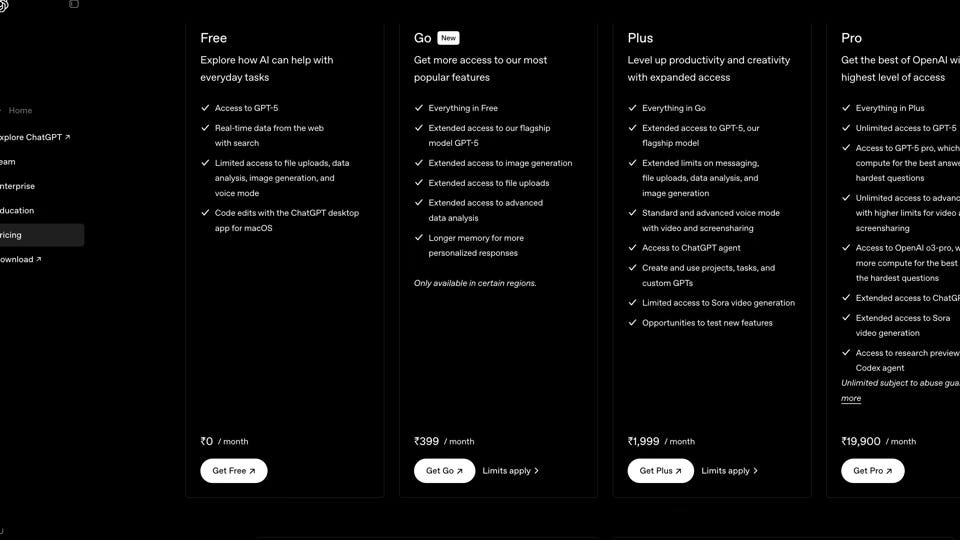

Sam Altman, OpenAI CEO, discussing AGI on CNBC’s “Squawk Box,” August 8, 2025.

The context: Altman’s pivot away from AGI (or Artificial General Intelligence) terminology comes at a particularly telling moment. It was only this June that he declared we were “entering the AGI age”. Now, just days after a GPT-5 model release which didn’t match the hype, and a ChatGPT Go subscription that begins its journey from India, the OpenAI CEO is distancing himself from the very concept that has defined his company’s mission (and in a way, somewhat justified its eye-watering $500 billion valuation). Going from “we are just a GPT-5 release away from AGI” to again being two or so years away tells me one clear thing: science isn’t aboard the hype train that AI bosses seem to be on. It is a rhetorical shift that coincides suspiciously with GPT-5’s lukewarm reception, the criticism being that this long-awaited model is delivering only incremental (while retaining typical AI foibles) and not revolutionary improvements over its predecessor (there was even an outcry on social media demanding that OpenAI bring back the GPT-4o model, which it eventually had to). From a corporate perspective, this may be bookmarked as a strategic recalibration of expectations, but for any of us who are watching closely, this is no such thing.

A reality check: Altman’s newfound skepticism about AGI as a “super useful term” reveals a fundamental problem, one that, in any other industry, would probably have seen some exits from a company. For years, OpenAI has raised billions by positioning AGI as its North Star, yet it now acknowledges there are “multiple definitions being used by different companies and individuals.” Somehow, people defining AGI is a problem. This admission exposes how the AI industry has been operating in a definitional vacuum, where goal posts can be moved whenever convenient, and never at the risk of valuations or funding.
When your latest model falls short of revolutionary impact, simply redefine what you were aiming for in the first place. The shift from binary AGI thinking to “levels of progress” signifies not just semantic gymnastics, but also defensive repositioning in the face of diminishing returns. GPT-5’s modest improvements suggest we may be at the doorstep of an AI plateau, where simply throwing more compute and data at transformer architectures is yielding marginal gains. Altman seems to be preemptively managing expectations by arguing that the concept itself is flawed. We’ve seen this before; it is a classic tech industry playbook, be it in chips, smartphones or operating systems. When a supposedly revolutionary breakthrough doesn’t materialise the way it should, quickly pivot to a narrative about continuous improvement and refinement. If AGI becomes a distraction promoted by those who need to keep raising funding, we may be witnessing a potential bubble deflation in real time. It may be prudent for seemingly gullible corporations worldwide, who have taken the words and promises of AI companies at face value and started replacing humans who have families to take care of with AI “copilots”, to pause and take note. If ever a pack of cards was staring us in the face, this is it.

Neural Dispatch is your weekly guide to the rapidly evolving landscape of artificial intelligence. Each edition delivers curated insights on breakthrough technologies, practical applications, and strategic implications shaping our digital future. Written and edited by Vishal Mathur. Produced by Shashwat Mohanty.
